How To

Configuring Sendmail for UF’s SMTP

Our Ubuntu web host, hosted with OSG, was not able to send mail (using PHP’s mail()) outside of UF. An OSG tech said our From: header should be a valid UF address (it was) and that the logs at smtp.ufl.edu showed those messages never made it there.

The solution was to configure sendmail to use smtp.ufl.edu as the “smart” relay host (as it’s described in the config file):

$ sudo nano /etc/mail/sendmail.cf

Press Ctrl+W and search for “smart”. On the line below it, add smtp.ufl.edu directly after DS, with no space. The result should be:

# "Smart" relay host (may be null)

DSsmtp.ufl.edu

Press Ctrl+X and save the buffer.

Restart sendmail: $ sudo /etc/init.d/sendmail restart

As soon as I did this, the queued messages were sent out. I still don’t know why messages to ufl.edu succeeded while others sat in the queue.
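To confirm the relay without going through PHP, you could hand a test message straight to the local sendmail with a minimal Python sketch. It assumes sendmail is listening on localhost port 25, which is the usual setup for Ubuntu’s sendmail package. The function names and addresses are hypothetical.

```python
import smtplib
from email.message import EmailMessage


def build_test_message(sender: str, recipient: str) -> EmailMessage:
    """Build a plain-text test message; `sender` should be a valid UF address."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "Relay test via smart host"
    msg.set_content("If this arrived outside UF, the smart host relay works.")
    return msg


def send_via_local_sendmail(msg: EmailMessage, host: str = "localhost", port: int = 25) -> None:
    """Hand the message to the local MTA, which should relay it through the smart host."""
    # Assumption: sendmail accepts SMTP connections on localhost:25
    with smtplib.SMTP(host, port) as smtp:
        smtp.send_message(msg)
```

To use it, substitute real addresses in a call like send_via_local_sendmail(build_test_message("you@ufl.edu", "you@example.com")). Afterwards, sudo mailq should show an empty queue if the message was relayed.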

Get higher quality images within printed web pages

Because web images are optimized for on-screen display (let’s say 96 DPI), images on printed pages usually look blurry, but they don’t have to:

1. Start with a high-resolution image, e.g. 2000 x 1000.

2. Save a version with dimensions that fit well in your printed layout when placed in an IMG element, e.g. 300 x 150.

3. In your print CSS, fix the size of the IMG element in pixels to match the dimensions in (2).

4. Using the original image, recreate the image file in (2) with significantly larger dimensions (same width/height ratio), e.g. 600 x 300.

The good news: the printed page will have the same layout as in (2), but with a higher-quality image. This is because, according to my testing, even browsers that use blocky “nearest neighbor” image scaling on screen scale images smoothly for print.
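The arithmetic behind this can be sketched as follows. It assumes the CSS reference pixel of 96 per inch: CSS defines 1in as 96px, which matches the “let’s say 96 DPI” above. Fixing the IMG size in CSS pixels pins its printed size; the file’s own pixel count only changes how densely those inches are filled. The function names are illustrative.

```python
CSS_PX_PER_INCH = 96  # CSS defines 1in == 96px


def printed_size_inches(css_width_px: int, css_height_px: int) -> tuple[float, float]:
    """Paper area the IMG occupies once the print CSS fixes its size in pixels."""
    return css_width_px / CSS_PX_PER_INCH, css_height_px / CSS_PX_PER_INCH


def effective_dpi(file_width_px: int, css_width_px: int) -> float:
    """Image pixels per printed inch when a file is scaled down to the fixed CSS width."""
    return file_width_px / css_width_px * CSS_PX_PER_INCH
```

With the example numbers, a 300 x 150 slot prints at 3.125 x 1.5625 inches. Filling it with the 600 x 300 file gives 192 image pixels per inch, twice the screen density.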

You Probably Don’t Need ETag

(updated 3/4 to include the “Serving from clusters” case)

As I see more server scripts implementing conditional GET (a good thing), I also see a tendency to use a hash of the content as the ETag header value. While this doesn’t break anything, it often needlessly reduces the performance of the system.

ETag is often misunderstood to function as a cache key. I.e., if two URLs give the same ETag, the browser could use the same cache entry for both. This is not the case. ETag is a cache key for a given URL. Think of the cache key as (URL + ETag). Both must match for the client to be able to create conditional GET requests.

What follows is that, if you have a unique URL and can send a Last-Modified header (e.g. based on mtime), you don’t need ETag at all. The older HTTP/1.0 Last-Modified/If-Modified-Since mechanism works just fine for implementing conditional GETs and will save you a bit of bandwidth. Opening and hashing content to create or validate an ETag is just a waste of resources and bandwidth.
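Here is a minimal sketch of a conditional GET that relies only on Last-Modified. It is written in plain Python rather than for any particular server framework; the function name and the (status, headers) return shape are assumptions for illustration. It compares whole seconds because HTTP dates have no finer resolution.

```python
from email.utils import formatdate, parsedate_to_datetime
from typing import Optional


def conditional_get(mtime: float, if_modified_since: Optional[str]) -> tuple[int, dict[str, str]]:
    """Return (status, headers) for a uniquely-URLed resource using only Last-Modified."""
    modified = int(mtime)  # HTTP dates have one-second resolution
    headers = {"Last-Modified": formatdate(modified, usegmt=True)}
    if if_modified_since:
        try:
            since = parsedate_to_datetime(if_modified_since).timestamp()
        except (TypeError, ValueError):
            since = None  # unparseable date: ignore it and send the full body
        if since is not None and modified <= since:
            return 304, headers  # client's copy is current; no body, no ETag needed
    return 200, headers
```

No ETag is computed or sent, so nothing has to open or hash the file. os.path.getmtime() is one way to obtain mtime.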

When you actually need ETag

There are only a few situations where Last-Modified won’t suffice.

Multiple versions of a single URL

Let’s say a page outputs different content for logged-in users, and you want to allow conditional GETs for each version. In this case, the ETag needs to change with auth status, and, in fact, you should assume different users might share a browser, so you’d want to embed something user-specific in the ETag as well. E.g. ETag = mtime + userId.

In the case above, make sure to mark private pages with “private” in the Cache-Control header, so any user-specific content will not be kept in shared proxy caches.
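For the multiple-versions case, a pair of hypothetical helpers could build ETag = mtime + userId and add the “private” directive only when the page is user-specific. In this sketch all anonymous visitors share one validator, which is an assumption.

```python
from typing import Optional


def versioned_etag(mtime: int, user_id: Optional[int]) -> str:
    """Quoted ETag built from mtime + userId, so each viewer's version has its own validator."""
    viewer = f"u{user_id}" if user_id is not None else "anon"
    return f'"{mtime}-{viewer}"'


def response_headers(mtime: int, user_id: Optional[int]) -> dict[str, str]:
    headers = {"ETag": versioned_etag(mtime, user_id)}
    if user_id is not None:
        # user-specific content: browsers may cache it, shared proxies must not
        headers["Cache-Control"] = "private"
    return headers
```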

No modification time available

If there’s no way to get (or guess) a Last-Modified time, you’ll have to use ETag if you want to allow conditional GETs at all. You can generate it by hashing the content (or using any function that changes when the content changes).
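When there is no usable mtime, a content-derived ETag can be sketched like this. The same applies to the cluster case below, where mtimes can’t be trusted. Truncating SHA-256 to 16 hex characters is an arbitrary choice; as the text says, any function that changes when the content changes will do.

```python
import hashlib


def content_etag(body: bytes) -> str:
    """Quoted ETag that changes whenever the response body changes."""
    # 16 hex chars of SHA-256 keep the header short while making collisions unlikely
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
```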

Serving from clusters

If you serve files from multiple servers, their file timestamps could differ. The Last-Modified date a client sees can then shift when it hits a different server, causing needless 200 responses. Basically, if you can’t trust your mtimes to stay in sync (I don’t know how often this is an issue), it may be better to place a hash of the content in an ETag.

Whenever you use ETag, remember that a conditional GET request may list multiple ETag values in its If-None-Match header. Returning the 304 status code is therefore not sufficient; you must also send the ETag header for the particular version you want the client to use. Most software I’ve seen at least gets this right.
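Here is a sketch of the If-None-Match side, assuming quoted entity tags as in the HTTP spec; the function names are illustrative. It uses weak comparison (ignoring a W/ prefix), which is what If-None-Match calls for. The ETag header is returned with the 304 as well as with the 200.

```python
import re
from typing import Optional

# a quoted entity tag (optionally weak) or the "*" wildcard
_ETAG_RE = re.compile(r'(?:W/)?"[^"]*"|\*')


def _opaque(tag: str) -> str:
    # weak comparison: a W/ prefix doesn't affect matching
    return tag[2:] if tag.startswith("W/") else tag


def respond_to_if_none_match(current_etag: str, if_none_match: Optional[str]) -> tuple[int, dict[str, str]]:
    headers = {"ETag": current_etag}  # tells the client which cached copy is fresh
    if if_none_match:
        tags = {_opaque(t) for t in _ETAG_RE.findall(if_none_match)}
        if "*" in tags or _opaque(current_etag) in tags:
            return 304, headers
    return 200, headers
```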

I got this wrong, too.

While writing this article I realized that my own PHP conditional GET class, used in Minify, has no way to disable unnecessary ETags (when the last modified time is known).

Secure Browsing 101

Dad forwarded an e-mail that tried to simplify the difference between HTTP and HTTPS and I wanted to add a bit to that.

Think of HTTPS as a secure telephone line

No one can eavesdrop, but don’t assume HTTPS is “secure” unless you know who’s on the other end. Evil and good-but-poorly-managed web sites can use HTTPS just as easily as Amazon.

E.g. a phishing e-mail could tell you to “login to eBay” at: https://ebay.securelogin.ru/. That URL is HTTPS, but is still designed to fool you. Always check the domain!

Public WiFi Networks

Most wifi networks in public places (and, sadly, in homes) are not password-protected and are therefore highly insecure. Any information (passwords, URLs) that travels over HTTP in these locations can be trivially captured by anyone with a laptop; assume that some kid sitting at Starbucks can see anything you do over HTTP. Don’t sign in to sites over HTTP and, in fact, if you’re already logged in, log out of HTTP sites before you browse them.

By the way, please, please, put a password on your wireless network at home. Otherwise the kid at Starbucks can easily park next door and spy on you from his car.

Webmail: Use Gmail or check it at home

As of today, Gmail is the only web-based e-mail site that allows all operations over HTTPS (as long as you use the HTTPS URL). Yahoo! and the others log you in over HTTPS, but your viewed and composed messages are sent over HTTP. Don’t view or send e-mail with sensitive info on public wifi networks (unless you’re on secure Gmail). The same goes for messages sent within social networks like MySpace and Facebook. If you’re at Starbucks, assume someone else can read everything you can.

Since many web accounts are tied to your e-mail, the security of your e-mail account should be your top priority. Give it a strong password that you don’t use on any other site.

On a wired connection, HTTP is mostly safe

Since HTTP is not encrypted, a “man-in-the-middle” could theoretically watch you browse just as at Starbucks, but such attacks are so rare that no one I know has ever heard of one occurring in real life. It’s best practice for sites to use HTTPS for all sensitive operations like signing in, but I don’t fret about it when my connection is wired and my home wifi is password-protected.

This is the tip of the web security iceberg, but these practices are essential in my opinion.

Getting phpQuery running under XAMPP for Windows

While trying to run the initial test scripts included with phpQuery 0.9.4 RC1, I got the following warning:

Warning: domdocument::domdocument() expects at least 1 parameter, 0 given in C:\xampp\htdocs\phpQuery-0.9.4-rc1\phpQuery\phpQuery.php on line 280

This is strange because DOMDocument’s constructor has only optional arguments.

As it turns out, XAMPP for Windows ships PHP with the old PHP4 “domxml” extension enabled by default (appears as extension=php_domxml.dll in \xampp\apache\bin\php.ini). This deprecated extension has a somewhat less-documented OO API, with domxml_open_mem() being accessible via new DOMDocument(). I.e. domxml hijacks PHP5’s DOMDocument constructor.

Comment out extension=php_domxml.dll with a semicolon, restart Apache, and phpQuery seems to work as designed.

Minify 2.1 on mrclay.org

A new release of Minify is finally out, and among several new features is the “min” application that makes 2.1 a snap to integrate into most sites. This post walks through the installation of Minify 2.1 on this site.

Pre-encoding vs. mod_deflate

Recently I configured Apache to serve pre-encoded files with encoding-negotiation. In theory this should be faster than using mod_deflate, which has to re-encode every hit, but testing was in order.

My mod_deflate setup consisted of a directory with this .htaccess:

AddOutputFilterByType DEFLATE application/x-javascript

BrowserMatch \bMSIE\s[456] no-gzip

BrowserMatch \b(SV1|Opera)\b !no-gzip

The directory also held a pre-minified version of jquery-1.2.3 (54,417 bytes) saved as “before.js”. The BrowserMatch directives ensured the same rules for buggy browsers as used in the type-map setup. The compression level was left at the default, which I assume is optimal for performance.

The type-map setup was as described here. It used the same “before.js” as the mod_deflate setup, plus separate files for the gzip-, deflate- and compress-encoded versions. Each encoded file was created using maximum compression (9), since we needn’t worry about encoding efficiency.

I benchmarked with Apache ab and ran 10,000 requests, 100 concurrently. To make sure the encoded versions were returned I added the request header Accept-Encoding: deflate, gzip. The ab commands:

ab -c 100 -n 10000 -H "Accept-Encoding: deflate, gzip" http://path/to/mod_deflate/before.js > results_deflate.txt

ab -c 100 -n 10000 -H "Accept-Encoding: deflate, gzip" http://path/to/statics/before.js.var > results_statics.txt

Pre-encoding kicks butt

| Method | Requests/sec | Output size (bytes) |
|---|---|---|
| mod_deflate | 187 | 16053 |
| pre-encoding + type-map | 470 | 15993 |

Apache can serve a pre-encoded version of jQuery 2.5x as fast as it can compress it on the fly with mod_deflate (470 vs. 187 requests/sec). It may also be worth mentioning that mod_deflate chooses gzip over deflate, sending a few more bytes (60 in this test).
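To compute the ratio from the saved results instead of by hand, a small parser for ab’s “Requests per second” line can be sketched as follows. It assumes ab’s usual report format; the function names are illustrative.

```python
import re

# ab reports a line like "Requests per second:    123.45 [#/sec] (mean)"
_RPS = re.compile(r"^Requests per second:\s+([\d.]+)", re.MULTILINE)


def requests_per_second(ab_output: str) -> float:
    match = _RPS.search(ab_output)
    if match is None:
        raise ValueError("no 'Requests per second' line in ab output")
    return float(match.group(1))


def speedup(baseline_output: str, candidate_output: str) -> float:
    """How many times more requests/sec the candidate served than the baseline."""
    return requests_per_second(candidate_output) / requests_per_second(baseline_output)
```

For the files written by the commands above, call speedup(open("results_deflate.txt").read(), open("results_statics.txt").read()). With the table’s figures that is 470 / 187, about 2.5.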

Basically, if you’re serving large textual files with Apache on a high-traffic site, you should work pre-encoding and type-map configuration into your build process and try these tests yourself rather than just flipping on mod_deflate.

mod_deflate may win for small files

Before serving jQuery, I ran the same test with a much smaller file (around 2K) and found that, at that size, mod_deflate actually outperformed type-map by a little. So somewhere between 2K and 54K the benefit of pre-encoding starts to pay off. It probably also depends on compression level, compression buffer sizes, and a bunch of other things no one wants to fiddle with.

Apache HTTP encoding negotiation notes

Now that Minify 2 is out, I’ve been thinking of expanding the project to take on the not-so-straightforward task of serving already HTTP-encoded files on Apache and letting Apache negotiate which encoded version to send. Taking CGI out of the picture would be the natural next step toward serving these files as efficiently as possible.

All mod_negotiation docs and articles I could find applied mainly to language negotiation, so I hope this is helpful to someone. I’m using Apache 2.2.4 on WinXP (XAMPP package), so the rest of this article applies to this setup.

Type-map over MultiViews

I was first able to get this working with MultiViews, but everything I’ve read says this is much slower than using type-maps. Supposedly, with MultiViews, Apache has to read the directory contents, generate an internal type-map structure for each file, and then apply the type-map algorithm to choose the resource to send. Creating the type-maps explicitly saves Apache the trouble. One type-map is required for each resource, but Minify will eventually automate this.

Setup

To simplify config, I’m applying this setup to one directory where all the content-negotiated files will be served from. Here’s the starting .htaccess (we’ll add more later):

# turn off MultiViews if enabled

Options -MultiViews

# For *.var requests, negotiate using type-map

AddHandler type-map .var

# custom extensions so existing handlers for .gz/.Z don't interfere

AddEncoding x-gzip .zg

AddEncoding x-compress .zc

AddEncoding deflate .zd

Now I placed 4 files in the directory (the encoded files were created with this little utility):

- before.js (not HTTP encoded)

- before.js.zd (deflate encoded – identical to gzip, but without header)

- before.js.zg (gzip encoded)

- before.js.zc (compress encoded)
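The post doesn’t show the utility used to create the encoded files. Here is a hypothetical Python stand-in that writes the gzip and deflate variants at maximum compression (9), as described for the benchmark.

```python
import gzip
import zlib
from pathlib import Path


def encode_variants(data: bytes, level: int = 9) -> dict[str, bytes]:
    """gzip and deflate versions of `data`, keyed by the custom extensions."""
    return {
        ".zg": gzip.compress(data, compresslevel=level),
        # zlib-wrapped deflate (RFC 1950 header + Adler-32); see the note below
        ".zd": zlib.compress(data, level),
    }


def write_variants(src: Path) -> list[Path]:
    """Write e.g. before.js.zg and before.js.zd next to the source file."""
    written = []
    for suffix, payload in encode_variants(src.read_bytes()).items():
        out = src.with_name(src.name + suffix)
        out.write_bytes(payload)
        written.append(out)
    return written
```

Python’s standard library has no encoder for the LZW “compress” format, so before.js.zc would still come from the Unix compress tool. The list above describes deflate as gzip without its header, i.e. a raw deflate stream. Browsers have historically disagreed on which form “deflate” means. To produce the raw form instead, use zlib.compressobj(level, zlib.DEFLATED, -15); a negative wbits value selects a raw stream.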

Now the type-map “before.js.var”:

URI: before.js.zd

Content-Type: application/x-javascript; qs=0.9

Content-Encoding: deflate

URI: before.js.zg

Content-Type: application/x-javascript; qs=0.8

Content-Encoding: x-gzip

URI: before.js.zc

Content-Type: application/x-javascript; qs=0.7

Content-Encoding: x-compress

URI: before.js

Content-Type: application/x-javascript; qs=0.6

What this gives us is already useful. When the browser requests before.js.var, Apache returns the file that (a) is encoded in a format the browser accepts and (b) has the highest qs value among those. If the browser is Firefox, that will be “before.js.zd” (the deflated version). Apache also sends two headers:

- the Content-Encoding header, so Firefox can decode the file;
- the Vary header, to tell caches that several versions exist at this URL and that which one you get depends on the Accept-Encoding header sent in the request.
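The selection rule just described can be modeled in a few lines. This is a simplified sketch, not mod_negotiation’s actual algorithm, which has more tie-breaking steps. TYPE_MAP mirrors before.js.var, and the function names are hypothetical.

```python
from typing import Optional

# (URI, Content-Encoding, qs) records, mirroring before.js.var
TYPE_MAP = [
    ("before.js.zd", "deflate", 0.9),
    ("before.js.zg", "x-gzip", 0.8),
    ("before.js.zc", "x-compress", 0.7),
    ("before.js", None, 0.6),
]


def accepted_codings(accept_encoding: str) -> set[str]:
    """Codings listed in Accept-Encoding with a nonzero q-value."""
    accepted = set()
    for item in accept_encoding.split(","):
        coding, *params = [part.strip() for part in item.split(";")]
        q = 1.0
        for param in params:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        if coding and q > 0:
            accepted.add(coding.lower())
    return accepted


def choose_variant(type_map, accept_encoding: str) -> str:
    """Pick the highest-qs record whose encoding the client accepts."""
    accepted = accepted_codings(accept_encoding)

    def acceptable(encoding: Optional[str]) -> bool:
        if encoding is None:
            return True  # unencoded variant: always acceptable in this sketch
        # treat "x-gzip" and "gzip" as the same coding
        plain = encoding[2:] if encoding.startswith("x-") else encoding
        return bool({plain, encoding, "*"} & accepted)

    candidates = [record for record in type_map if acceptable(record[1])]
    return max(candidates, key=lambda record: record[2])[0]
```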

The Content-Encoding lines in the type-map tell Apache to look out for these encodings in the Accept-Encoding request header. E.g. Firefox accepts only gzip or deflate, so “Content-Encoding: x-compress” tells Apache that Firefox can’t accept before.js.zc. If you strip out the Content-Encoding line from “before.js.zc” and give it the highest qs, Apache will dutifully send it to Firefox, which will choke on it. The “x-” in the Content-Encoding lines and AddEncoding directives is used to negotiate with older browsers that call gzip “x-gzip”. Apache understands that it also has to report this encoding the same way.
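A type-map like before.js.var is needed for every resource, so generating it is the obvious thing to automate. Here is a hypothetical generator that reproduces the records and qs values shown above; it is not Minify’s code.

```python
# (suffix, Content-Encoding, qs) in order of preference; None means unencoded
VARIANTS = [
    (".zd", "deflate", 0.9),
    (".zg", "x-gzip", 0.8),
    (".zc", "x-compress", 0.7),
    ("", None, 0.6),
]


def build_type_map(filename: str, content_type: str = "application/x-javascript") -> str:
    """Return the text of `<filename>.var` in the format shown above."""
    records = []
    for suffix, encoding, qs in VARIANTS:
        lines = [f"URI: {filename}{suffix}", f"Content-Type: {content_type}; qs={qs}"]
        if encoding is not None:
            lines.append(f"Content-Encoding: {encoding}")
        records.append("\n".join(lines))
    # a blank line separates the records in a type-map file
    return "\n\n".join(records) + "\n"
```

To write the file, call pathlib.Path("before.js.var").write_text(build_type_map("before.js")).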

Sending other desired headers

I’d like to add the charset to the Content-Type header, and also some headers to optimize caching. The following .htaccess snippet removes ETags and adds the far-off expiration headers:

# Below we remove the ETag header and set a far-off Expires

# header. Since clients will aggressively cache, make sure

# to modify the URL (querystring or via mod_rewrite) when

# the resource changes

# remove ETag

FileETag None

# requires mod_expires

ExpiresActive On

# sets Expires and Cache-Control: max-age, but not "public"

ExpiresDefault "access plus 1 year"

# requires mod_headers

# adds the "public" to Cache-Control.

Header set Cache-Control "public, max-age=31536000"

Adding the charset was trickier. The type-map docs show the charset placed in the Content-Type lines of the type-map, only this doesn’t work! The value sent is actually just the original type set in Apache’s “mime.types”. So to actually send a charset, you have to redefine the type for the js extension (I added CSS too, since I’ll be serving those the same way):

# Necessary to add charset while using type-map

AddType application/x-javascript;charset=utf-8 js

AddType text/css;charset=utf-8 css

Now we have full negotiation based on the browser’s Accept-Encoding, and we are sending far-off expiration headers and the charset. Try it.
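To check a live response against this setup, a hypothetical helper can list what is missing from its headers. It accepts a plain dict or the headers object from urllib.request.urlopen(), since both support .get(). It allows a minute of slack between Date and Expires.

```python
from email.utils import parsedate_to_datetime

ONE_YEAR = 365 * 24 * 60 * 60  # 31536000, matching max-age in the .htaccess above


def far_future_problems(headers) -> list[str]:
    """List ways a response falls short of the caching setup described above."""
    problems = []
    cache_control = [d.strip() for d in headers.get("Cache-Control", "").split(",")]
    if "public" not in cache_control:
        problems.append("Cache-Control lacks public")
    if f"max-age={ONE_YEAR}" not in cache_control:
        problems.append("max-age is not one year")
    if headers.get("ETag"):
        problems.append("ETag still present")
    if "charset=utf-8" not in headers.get("Content-Type", "").replace(" ", "").lower():
        problems.append("no utf-8 charset")
    date, expires = headers.get("Date"), headers.get("Expires")
    if not (date and expires):
        problems.append("missing Date or Expires")
    else:
        delta = parsedate_to_datetime(expires) - parsedate_to_datetime(date)
        if delta.total_seconds() < ONE_YEAR - 60:  # small slack for clock rounding
            problems.append("Expires is less than a year after Date")
    return problems
```

On a host without mod_headers, expect the “public” check to fail, as noted below.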

Got old IE version users?

This config trusts Apache to decide which encodings a browser can handle. The problem is that IE6 before XP SP1, and older versions, lie: they claim to accept encoded content but can’t really handle it in some situations. HTTP_Encoder::_isBuggyIe() roots these out, but Apache needs help. With mod_rewrite, we can sniff for the affected browsers and rewrite their requests to go directly to the non-encoded files:

# requires mod_rewrite

RewriteEngine On

RewriteBase /tests/encoding-negotiation

# IE 5 and 6 are the only ones we really care about

RewriteCond %{HTTP_USER_AGENT} MSIE\ [56]

# but not if it's got the SV1 patch or is really Opera

RewriteCond %{HTTP_USER_AGENT} !(\ SV1|Opera)

RewriteRule ^(.*)\.var$ $1 [L]

Also worth mentioning is that, if you figure out the browser encoding elsewhere (e.g. in PHP while generating markup), you can link directly to the encoded files (like before.js.zd) and they’ll be served correctly.
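The two RewriteCond lines amount to a simple user-agent test. Here is a Python mirror of them, useful for checking which strings get rewritten. The function names are illustrative, and real IE user-agent strings vary.

```python
import re

_OLD_IE = re.compile(r"MSIE [56]")   # RewriteCond %{HTTP_USER_AGENT} MSIE\ [56]
_EXEMPT = re.compile(r" SV1|Opera")  # RewriteCond %{HTTP_USER_AGENT} !(\ SV1|Opera)


def is_buggy_ie(user_agent: str) -> bool:
    """True when both RewriteConds above would match."""
    return bool(_OLD_IE.search(user_agent)) and not _EXEMPT.search(user_agent)


def rewritten_path(path: str, user_agent: str) -> str:
    """Apply the RewriteRule: strip .var for buggy IE so it gets the unencoded file."""
    if is_buggy_ie(user_agent) and path.endswith(".var"):
        return path[: -len(".var")]
    return path
```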

No mod_headers

If you’re on a shared host without mod_headers enabled (like mrclay.org for now), you’ll just have to accept what mod_expires sends. The HTTP/1.1 Cache-Control header won’t have the explicit “public” directive, but responses should still be quite cacheable.

Minifying Javascript and CSS on mrclay.org

Update: Please read the new version of this article. It covers Minify 2.1, which is much easier to use.

Minify v2 is coming along, but it’s time to start getting some real-world testing, so last night I started serving this site’s Javascript and CSS (at least the 6 files in my WordPress templates) via a recent Minify snapshot.

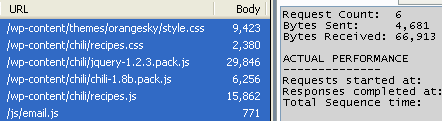

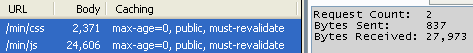

As you can see below, I was serving 67K over 6 requests and was using some packed Javascript, which has a client-side decompression overhead.

Using Minify, this is down to 2 requests, 28K (58% reduction), and I’m no longer using any packed Javascript:

Getting it working

- Exported Minify from svn (only the /lib tree is really needed).

- Placed the contents of /lib in my PHP include path.

- Determined where I wanted to store cache files (server-side caching is a must.)

- Gathered a list of the JS/CSS files I wanted to serve.

- Created “min.php” in the doc root:

// load Minify
require_once 'Minify.php';

// setup caching
Minify::useServerCache(realpath("{$_SERVER['DOCUMENT_ROOT']}/../tmp"));

// controller options
$options = array(
    'groups' => array(
        'js' => array(
            '//wp-content/chili/jquery-1.2.3.min.js'
            ,'//wp-content/chili/chili-1.8b.js'
            ,'//wp-content/chili/recipes.js'
            ,'//js/email.js'
        )
        ,'css' => array(
            '//wp-content/chili/recipes.css'
            ,'//wp-content/themes/orangesky/style.css'
        )
    )
);

// serve it!
Minify::serve('Groups', $options);

(note: The double solidi at the beginning of the filenames are shortcuts for $_SERVER['DOCUMENT_ROOT'].)

- In HTML, replaced the 4 script elements with one:

<script type="text/javascript" src="/min/js"></script>

(note: Why not “min.php/js”? Since I use MultiViews, I can request min.php by just “min”.)

- and replaced the 2 stylesheet links with one:

<link rel="stylesheet" href="/min/css" type="text/css" media="screen" />

At this point Minify was doing its job, but there was a big problem: My theme’s CSS uses relative URIs to reference images. Thankfully Minify’s CSS minifier can rewrite these, but I needed to specify that option just for style.css.

I did that by giving a Minify_Source object in place of the filename:

// load Minify_Source
require_once 'Minify/Source.php';

// new controller options
$options = array(
    'groups' => array(
        'js' => array(
            '//wp-content/chili/jquery-1.2.3.min.js'
            ,'//wp-content/chili/chili-1.8b.js'
            ,'//wp-content/chili/recipes.js'
            ,'//js/email.js'
        )
        ,'css' => array(
            '//wp-content/chili/recipes.css'
            // style.css has some relative URIs we'll need to fix since
            // it will be served from a different URL
            ,new Minify_Source(array(
                'filepath' => '//wp-content/themes/orangesky/style.css'
                ,'minifyOptions' => array(
                    'prependRelativePath' => '../wp-content/themes/orangesky/'
                )
            ))
        )
    )
);

Now, during the minification of style.css, Minify prepends all relative URIs with ../wp-content/themes/orangesky/, which fixes all the image links.
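For readers curious what this rewriting involves, here is a rough Python sketch of prepending a path to relative url() references. It is not Minify’s implementation, which is PHP and may handle more cases. The sketch skips absolute, root-relative and scheme URIs (such as data:) and ignores @import strings; the function name is hypothetical.

```python
import re

# url(...) with optional matching single or double quotes
_URL_RE = re.compile(r"""url\(\s*(['"]?)(.*?)\1\s*\)""")
# URIs that are already absolute: a scheme like http: or data:, or a leading slash
_ABSOLUTE_RE = re.compile(r"^(?:[a-z][a-z0-9+.-]*:|/)", re.IGNORECASE)


def prepend_relative_path(css: str, prefix: str) -> str:
    """Prefix every relative url() reference in `css` with `prefix`."""
    def fix(match):
        quote, uri = match.group(1), match.group(2)
        if _ABSOLUTE_RE.match(uri):
            return match.group(0)  # leave absolute and root-relative URIs alone
        return f"url({quote}{prefix}{uri}{quote})"

    return _URL_RE.sub(fix, css)
```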

What’s next

This is fine for now, but there’s one more step we can do: send far off Expires headers with our JS/CSS. This is tricky because whenever a change is made to a source file, the URL used to call it must change in order to force the browser to download the new version. As of this morning, Minify has an elegant way to handle this, but I’ll tackle this in a later post.
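One common approach is to put the newest modification time of a group’s files into the query string, so the URL changes whenever any source file does. It is sketched here in Python and is not necessarily what Minify does. The function names are hypothetical.

```python
import os


def versioned_url(base_url: str, mtimes: list[float]) -> str:
    """Append the newest source mtime so the URL changes whenever any source changes."""
    return f"{base_url}?{int(max(mtimes))}"


def group_url(base_url: str, paths: list[str]) -> str:
    """Convenience wrapper that reads the mtimes of a group's files from disk."""
    return versioned_url(base_url, [os.path.getmtime(p) for p in paths])
```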

Update: Please read the new version of this article. It covers Minify 2.1, which is much easier to use.