Modern application design is solved! OK, well we at least have a set of camps with their own principles, tools, and paradigms leading us toward the light. One might be “build some components, wire them up with references to each other, and let them talk to each other.” We love/hate static typing, but it allows tools to reason about really large programs.

All that is great, but I feel like plugin system design is in the wild west. To be clear, I mean dropping a new directory of code in place, performing some ritual, and the system behaving radically differently. Of course, we’re getting better at this as apps learn from each other, but I feel like our principles and tools aren’t particularly well matched to this goal of plugins being able to cooperate on a deep level to build up a system.

Allowing plugins to provide APIs introduces a big set of challenges. Just a few:

- How do we conditionally use APIs if they’re available?

- How can a plugin decorate/filter the API’s output?

- How can a plugin replace the API with its own?

- If two plugins want to replace the API, which “wins”?

- How can a plugin remove part of the behavior/API of another plugin?

- How much can plugins detect about each other?

Most robust plugin systems solve these with event systems plus some mechanism for ordering plugins to settle disputes (and every system’s mechanism is unique).

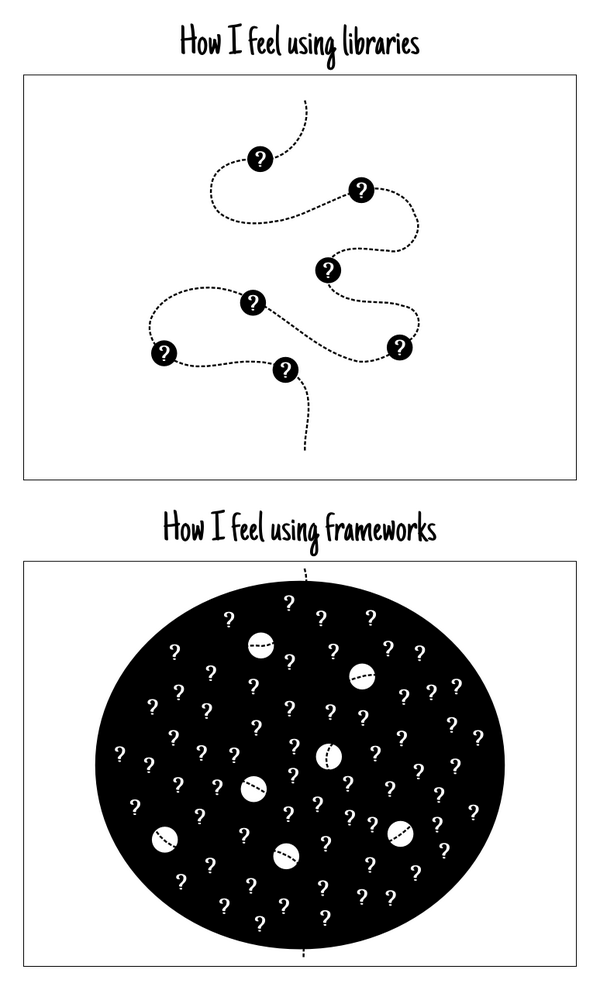
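To make the event-plus-ordering approach concrete, here is a minimal sketch in Python. Everything in it is hypothetical and not taken from any particular framework: the `EventBus` class, the `"page.title"` event name, and the rule that lower priority numbers run first are all assumptions chosen for illustration.

```python
from collections import defaultdict
from typing import Any, Callable

Listener = Callable[[Any], Any]


class EventBus:
    """Hypothetical string-keyed event bus (illustration only)."""

    def __init__(self) -> None:
        # event name -> list of (priority, registration order, listener)
        self._listeners: dict[str, list[tuple[int, int, Listener]]] = defaultdict(list)
        self._counter = 0

    def on(self, event: str, listener: Listener, priority: int = 0) -> None:
        """Register a listener; lower priority numbers run earlier (an assumption)."""
        self._listeners[event].append((priority, self._counter, listener))
        self._counter += 1

    def has_listeners(self, event: str) -> bool:
        """Lets a plugin check whether anyone provides or handles an event."""
        return bool(self._listeners.get(event))

    def filter(self, event: str, value: Any) -> Any:
        """Pass a value through every listener in priority order."""
        ordered = sorted(self._listeners.get(event, []), key=lambda entry: entry[:2])
        for _, _, listener in ordered:
            value = listener(value)
        return value


bus = EventBus()

# A "core" plugin provides the base behavior.
bus.on("page.title", lambda title: title.strip())

# Plugin A decorates the output.
bus.on("page.title", lambda title: title + " | My Site", priority=10)

decorated = bus.filter("page.title", "  Home  ")

# Plugin B replaces the output outright by ignoring its input.
# It "wins" only because its priority makes it run last.
bus.on("page.title", lambda _title: "Maintenance", priority=100)

replaced = bus.filter("page.title", "  Home  ")
```

Note that nothing connects these three plugins except the string `"page.title"` and a pair of magic numbers. Decorating, replacing, and settling who wins all work, but only by convention.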

A big problem is that events are almost always linked at run time by string names. Hence it’s very difficult for tools and humans to reason about which listeners will be called, in what order, and what data will flow to each listener and be returned to the dispatching party. Ideally IDEs could sense all this stuff, and help wire up new listeners and events. Instead, devs on a plugin-heavy system must do string searching on event names or at best use some reporting tool built into the event system.

Symfony’s typed event objects and Ruby’s symbols probably help here. Drupal has a strong convention both in code and docs that helps, and is popular enough for toolmakers to focus on it. Middleware systems as in Express (Node.js) and Stack (PHP) offer a formal way to compose applications, which is pretty exciting, but I’m not sure they solve any of the above problems of plugins tightly collaborating on processes.
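As a rough illustration of why typed event objects might help, here is a sketch in Python where listeners are keyed by an event class instead of a string. This is not Symfony's API; `TitleRendering`, `TypedDispatcher`, and `add_site_suffix` are hypothetical names invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, TypeVar

E = TypeVar("E")


@dataclass
class TitleRendering:
    """Hypothetical event object: carries the data listeners may read or change."""
    title: str


class TypedDispatcher:
    """Hypothetical dispatcher keyed by event class rather than by string name."""

    def __init__(self) -> None:
        self._listeners: dict[type, list[Callable]] = {}

    def listen(self, event_type: type[E], listener: Callable[[E], None]) -> None:
        # The listener's parameter type is tied to the event class it registers for.
        self._listeners.setdefault(event_type, []).append(listener)

    def dispatch(self, event: E) -> E:
        # Look up listeners by the exact class of the event object.
        for listener in self._listeners.get(type(event), []):
            listener(event)
        return event


def add_site_suffix(event: TitleRendering) -> None:
    event.title += " | My Site"


dispatcher = TypedDispatcher()
dispatcher.listen(TitleRendering, add_site_suffix)

rendered = dispatcher.dispatch(TitleRendering(title="Home"))
```

Because the event is a class, "find usages" in an IDE or a type checker can locate the dispatch site and the listeners, and the shape of the data flowing through is declared in one place. Ordering disputes between plugins are still unsolved here; the listeners simply run in registration order.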

What does the future look like here, and what’s the way toward it? Standardizing on a single event system seems unlikely, but what are the best and most powerful ones out there? What language features would make this easier? Can someone stop me from diving deep into event-driven programming literature?