(NSFW)

Patent Absurdity

Don’t miss Patent Absurdity, a free half-hour documentary that “explores the case of software patents and the history of judicial activism that led to their rise, and the harm being done to software developers and the wider economy.”

When you open the page, the embedded video begins without human interaction, a violation of an Eolas patent. British Telecom tried to patent the hyperlink that took you to the page. The page probably results in transmission of a JPEG to your computer, a violation of a Forgent Networks patent. The browser you’re using is free in part because of the many patent-unencumbered open-source libraries and concepts it’s built upon: the concept of the “window” and the “tab”; the libraries that parse HTML, CSS, and JavaScript and compress those resources over the wire; the TCP, IP, and HTTP protocols that made the internet bloom worldwide; and the OS clipboard (“copy/paste”) that helped developers build and reuse those libraries.

Had the modern interpretation of software patent law existed in the 60s, our computers, and the state of technology in general, might be very different. The clumsy technology in “Brazil” comes to mind.

With so much of the world’s economy and productivity now tied to software, the proliferation of software patents (and, worse, of the areas where those laws can apply) threatens to severely stifle innovation and funnel ever more of our resources into the pockets of law firms and of patent-trolling organizations that exist simply to extort from others.

Bash script: recursive diff between remote hosts

This script will generate a recursive, unified diff between the same path on two remote servers. You set the CONNECT1 and CONNECT2 variables as necessary to point to your hosts/paths. Of course, the users you connect as must have read access to the files/directories you’re accessing.

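#!/bin/bash
# USAGE: ./sshdiff DIRECTORY
#
# E.g. ./sshdiff static/css
# Generates a recursive diff between /var/www/static/css
# on two separate servers.

# Refuse to run without a path; otherwise all of /var/www would be copied.
if [ -z "$1" ]; then
    echo "USAGE: $0 DIRECTORY" >&2
    exit 1
fi

# Local scratch directories for the two copies
TEMP1=~/.tmp_site1
TEMP2=~/.tmp_site2

# Remote hosts/paths and SSH ports
CONNECT1="user1@hostname1:/var/www/$1"
PORT1=22
CONNECT2="user2@hostname2:/var/www/$1"
PORT2=22

# Start from empty scratch directories so leftovers from an
# interrupted run can't show up in the diff.
rm -rf "$TEMP1" "$TEMP2"
mkdir "$TEMP1" "$TEMP2" || exit 1

# Copy each remote tree locally (-r recursive, -q quiet)
scp -rq -P "$PORT1" "$CONNECT1" "$TEMP1"
scp -rq -P "$PORT2" "$CONNECT2" "$TEMP2"

echo -e "\n\n\n\n\n"
clear

# -u unified, -r recursive, -b ignore changes in the amount of whitespace
diff -urb "$TEMP1" "$TEMP2"

rm -r "$TEMP1" "$TEMP2"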

Magnetar on This American Life

Today I heard the tail end of a fantastic This American Life episode based on a ProPublica story on the hedge fund Magnetar. The short version is that, when the housing market started to appear unstable in 2005, Magnetar realized that bad incentives at investment banks would allow it to make money by creating a resurgence of CDO investments in the housing market, prolonging the inflation of the housing bubble.

The bad incentives were that investment bankers were paid immediately in handsome fees for creating and selling these CDOs, with no requirement to have “skin in the game” if the CDOs later became worthless. When the CDO collapses, the bank (and, through bailouts, the taxpayer) takes the loss, but the banker is long gone.

Magnetar made a name on buying the riskiest layer of CDOs, giving other investors the impression that they were a safe investment and causing the CDO market—and connected investments into the housing market—to take off again (when it could have otherwise returned to Earth slowly and less destructively). What was not made clear to CDO investors—and some say this constituted criminal activity by CDO managers—was the fact that Magnetar was simultaneously placing large bets against the same CDOs and actively encouraging banks to create them from much riskier loans.

I.e. Magnetar’s CDO investments were designed to allow future losses, but to temporarily pay the bills and give false information to the market, allowing Magnetar to later gain tremendously on its eventual downturn. Had the CDO creators and dealers passed on this information (by having incentive to do so), the investors would’ve realized these things were being designed to fail and stayed out of them.

Fire Jimi

Flipping through the radio I heard “Fire” and thought, “could I get this without guitar?” Of course, the answer is yes. And I like it.

Alex and Elliott

“Thank You Friends” was the first Big Star song I heard, hanging out with Dan Francke listening to a Time-Life cassette comp he ripped from his dad.

Elgg, ElggChat, and Greener HTTP Polling

At my new job, we maintain a site powered by Elgg, the PHP-based social networking platform. I’m enjoying getting to know the system and the development community, but my biggest criticisms are related to plugins.

On the basis of “keeping the core light”, almost all functionality is outsourced to plugins, and you’ll need lots of them. Venturing beyond the “core” plugins—generally solid, but often providing just enough functionality to leave you wanting—is scary because generally you’re tying 3rd-party code into the event system running every request on the site. Nontrivial plugins have to provide a lot of their own infrastructure and this seems to make it more likely that you’ll run into conflict bugs with other plugins. With Elgg being a small-ish project, non-core plugins tend to end up not well-maintained, which makes the notion of upgrading to the latest Elgg version a bit scary when there have been API changes. Then there’s the matter of determining in what order your many plugins sit in the chain; order can mean subtle differences in processing and you just have to shift things around hoping to not break something while fixing something else. Those are my initial impressions anyway, and no doubt many other open source systems relying heavily on plugins have these problems. There’s a lot of great rope to hang yourself with.

Jeroen Dalsem’s ElggChat seems to be the slickest chat mod for Elgg. Its UI more or less mirrors Facebook’s chat, making it instantly usable. It’s a nice piece of work. Now for the bad news (as of version 0.4.5):

- Every tab of every logged-in user polls the server every 5 or 10 seconds. This isn’t a design flaw, since all web chat clients must poll or use some form of comet (to which common PHP environments are not well-suited), but other factors make ElggChat’s polling worse than it needs to be:

  - Each poll action looks up all the user’s friends and existing chat sessions and messages and returns all of that in every response. If the user had 20 friends, a table containing all 20 of them would be generated and returned every 5 seconds. The visible UI would also become unwieldy if not unusable.

  - The poll actions don’t use Elgg’s “action token” system (added in 1.6 to prevent CSRFs). This isn’t much of a security flaw, but in Elgg 1.6 it fills your httpd logs with “WARNING: Action elggchat/poll was called without an action token…” If you average 20 logged-in users browsing the site and poll every 10 seconds, that’s 172,800 long, useless error log entries (a sea obscuring errors you want to see) per day. Double that if you’re polling at 5 seconds.

- The recent Elgg 1.7 makes the action tokens mandatory so the mod won’t work at all if you’ve upgraded.

- Dalsem hasn’t updated it for 80 days, I can’t find any public repo of the code (to see if he’s working on it), and he doesn’t respond to commenters wondering about its future.
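The log-volume estimate in the list above is simple arithmetic, and a short shell function makes it easy to rerun with your own numbers. The function name `polls_per_day` is made up for this sketch, and it assumes one polling tab per logged-in user.

```shell
#!/bin/bash
# polls_per_day USERS INTERVAL_SECONDS
# Prints how many poll requests (and, without action tokens, how many
# warning lines) USERS clients generate in one day (86400 seconds).
polls_per_day() {
    local users=$1 interval=$2
    echo $(( users * 86400 / interval ))
}

polls_per_day 20 10   # prints 172800
polls_per_day 20 5    # prints 345600
```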

The thought of branching and fixing this myself is not attractive at the moment, for a few reasons (one of which being our site would arguably be better served by a system not depending on the Elgg backend, since we have content in other systems, too), but here are some thoughts on it.

Adding the action token is obviously the low-hanging fruit. I believe I read Facebook loads the friends and status list only every 3 minutes, which seems reasonable. That would cut most of the poll actions down to simply maintaining chat sessions. Facebook’s solution to the friends online UI also makes sense: show only those active, not offline users.

“Greener” Polling

Setting aside the ideal of comet connections, one of the worst aspects of polling is the added server load of firing up server-side code and your database for each of those extra (and mostly useless) requests. A much lighter mechanism would be to maintain a simple message queue via a single flat file, accessible via HTTP, for each client. The client would simply poll the file with a conditional XHR GET request and the httpd would handle this with minimal overhead, returning minimal 304 headers when appropriate.

In its simplest form, the poll file would just be an alerting mechanism: To “alert” a client you simply place a new timestamp in its poll file. On the next poll the client will see the timestamp change and immediately make another XHR request to fetch the new data from the server-side script.
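Here is a rough local simulation of that alerting idea, not ElggChat code. In a real deployment the client would be browser JavaScript making conditional GET requests and the httpd would answer 304 on its own; below, a plain string comparison stands in for that check. The function names `alert_client` and `poll_once` are invented for this sketch.

```shell
#!/bin/bash
# Server side: place a new stamp in a client's poll file.
# Writing to a temp file and renaming it means a poller never
# reads a half-written file.
alert_client() {
    local poll_file=$1
    local stamp=${2:-$(date +%s)}   # optional explicit stamp, else "now"
    printf '%s\n' "$stamp" > "$poll_file.tmp" && mv "$poll_file.tmp" "$poll_file"
}

# Client side (simulated): compare the stamp remembered from the last
# poll with the file's current contents. "unchanged" is the cheap 304
# case; "changed <stamp>" is the cue to fetch the new data from the
# server-side script.
poll_once() {
    local poll_file=$1 last_seen=$2 current
    current=$(cat "$poll_file" 2>/dev/null)
    if [ "$current" = "$last_seen" ]; then
        echo "unchanged"
    else
        echo "changed $current"
    fi
}
```

Only the "changed" branch would ever touch PHP or the database; every other poll is a static file check.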

Integrating this with ElggChat

In ElggChat, clicking a user creates a unique “chatsession” (I’m calling this “CID”) on the server, and each message sent is destined for a particular CID. This makes each tab in the UI like a miniature “room”, with the ability to host multiple users. You can always open a separate room to have a side conversation, even with the same user.

In the new model, before returning the CID to the sender, you’d update the poll files of both the sender and recipient, adding the CID to each. When the files are modified, you really need to keep only a subset of recent messages for each CID. Just enough to restore the chat context when the user browses to a new page. The advantage is, all the work of maintaining the chat sessions and queues is only done when posts are sent, never during the many poll requests.
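One way the "update both poll files, keep only recent messages" step could look is sketched below. The file format (one line per message, CID and text separated by a tab), the `MAX_PER_CID` limit, and the `post_message` function are all assumptions made for illustration; message text is assumed to contain no newlines.

```shell
#!/bin/bash
# How many recent messages to keep per chat session (hypothetical default).
MAX_PER_CID=${MAX_PER_CID:-3}

# post_message CID TEXT POLL_FILE...
# Appends the message to every participant's poll file, then trims each
# file so that only the newest MAX_PER_CID lines per CID remain.
post_message() {
    local cid=$1 text=$2 poll_file
    shift 2
    for poll_file in "$@"; do
        printf '%s\t%s\n' "$cid" "$text" >> "$poll_file"
        # awk reads the file twice: the first pass counts lines per CID,
        # the second prints a line only if it is among the last "max"
        # lines for its CID.
        awk -F '\t' -v max="$MAX_PER_CID" '
            NR == FNR { total[$1]++; next }
            total[$1] - ++seen[$1] < max
        ' "$poll_file" "$poll_file" > "$poll_file.tmp" &&
            mv "$poll_file.tmp" "$poll_file"
    done
}
```

A call such as `post_message 42 "hello" sender.txt recipient.txt` does all of its work at send time, so the poll requests themselves stay as cheap as a static file fetch.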

Since these poll files would all be sitting in public directories, their filenames would need to contain an unguessable string associated with each user.
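A minimal sketch of generating such a string, assuming a system with `/dev/urandom` and the usual `head`, `od`, and `tr` utilities. The function names and the `poll-<token>.txt` naming scheme are hypothetical; the token would need to be stored with the user's record so the server can find the file again.

```shell
#!/bin/bash
# Prints 32 lowercase hex characters (128 random bits) read from the
# kernel's random source.
poll_file_token() {
    head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# poll_file_path PUBLIC_DIR TOKEN
# Builds the public path of one user's poll file.
poll_file_path() {
    printf '%s/poll-%s.txt\n' "$1" "$2"
}

token=$(poll_file_token)
poll_file_path /var/www/poll "$token"
```

The directory should also have listings disabled in the httpd, since a directory index would reveal every token.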

Hooray For Tuesdays

A.V. Undercover: The Onion’s A.V. Club invites 25 bands to record 25 covers, released each Tuesday.

Ted Leo makes a nice start with “Everybody Wants to Rule the World” (hat tip). I kinda see it going downhill from here but the cover choices look good.

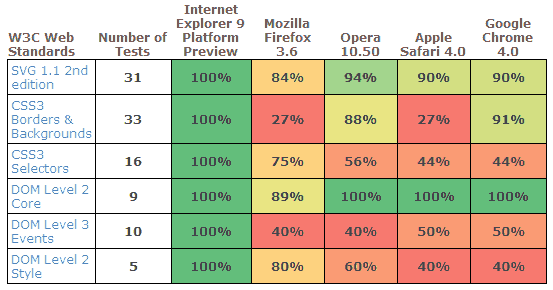

IE9 May Raise the Bar

Wow.

IE9 is coming, and it looks like it’ll get Microsoft back in the game. Full Developer Guide.

The Good: New standards supported, hardware-accelerated canvas, SVG, JavaScript speed on par with the other browsers, preview installs side-by-side with IE.

The Bad: Not available on XP and no guarantee it will be. XP users will be stuck with, you know, all the other browsers that run their demos fine.

It could always be worse

~~Occasionally~~ Very infrequently, with help from my caffeine addiction and Intense Focus On Writing Awesome Code For Employers Who May Read This, empty Coke Zero cans will slowly accumulate in my vicinity. I couldn’t say how many. In the worst of times enough to not want to know how many.

This morning I stumbled across a 1995 photo of Netscape programmer Jamie Zawinski’s cubicle, or the “Tent of Doom”.

The awareness of more severe dysfunction in others is a cold comfort.